🤫 What is Whisper? #

In this post, we see how you can start creating Whisper video captions on your local machine, without a GPU. Before we turn to that, there are a few terms to unwrap in there! Closed captions are the text transcriptions which appear superimposed on videos. They are fantastic for accessibility, and you should always try to include them. You will doubtless be aware of recent advances in artificial intelligence (AI). ChatGPT has recently stolen the spotlight, but before that we had the DALL-E, Midjourney, and Stable Diffusion AI image generation tools. With so much hype surrounding those, you might have missed Whisper.

Whisper from OpenAI generates transcriptions from spoken audio in English and other languages. You use Whisper’s trained models for transcription, and can even translate audio in other languages into an English transcription. Whisper represents a step change in speed and fidelity compared to other contemporary models. YouTube offers a free automatic captioning service, which, to be honest, I thought was marvellous. That was until I tried Whisper! All models will get the odd word wrong, especially in an obscure context, but I noticed Whisper did this far less frequently than YouTube. On top of that, for longer videos, I often had a long wait for the YouTube closed caption track to be generated.

Where do GPUs come into it? #

It turns out GPUs, the tech for generating realistic graphics on your games console, are also pretty good at machine learning tasks. Both training machine learning models and running them on a GPU can drastically cut the time needed, compared to a CPU (the traditional main processor in a computer).

You do not need to buy an expensive GPU just to run your transcription! Services like Paperspace offer (free and paid) GPU compute. This lets you run the Whisper Python model, for example, in web-based Jupyter notebooks. That is exactly how I first used Whisper. However, as we will see, if your audio is not too long (or you are patient), you can run C++ code locally on your CPU. If you want to see how, read on!

🖥️ Running Whisper C++ on your local CPU #

whisper.cpp is a lightweight C++ implementation, by Georgi Gerganov, of the original Whisper Python model. It is optimized to run on Apple Silicon processors, but also runs on Intel processors. The app is CPU intensive, so not ideal for running on your 15-year-old laptop, already on its last legs! To follow this guide, you need to be comfortable running code in the Terminal. It is not essential that you know C++, but some previous experience compiling C++ code would make setting things up a little easier.

🧱 What are we Building? #

We will construct a pipeline for transforming MP4 video into Web Video Text Tracks (WebVTT, also known as VTT) closed caption files. You can upload VTT files to YouTube, Mux or other services along with your video.
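A VTT file is plain text. It starts with a `WEBVTT` line and is followed by cues. Each cue has a `start --> end` timing line in `HH:MM:SS.mmm` form and then the caption text.

The C++ sketch below builds such a file from a list of cues. A few things to note:

- `Cue`, `vtt_timestamp` and `write_vtt` are hypothetical names chosen for illustration. They are not part of whisper.cpp.
- It assumes non-negative times in milliseconds.

```cpp
#include <cstdint>
#include <cstdio>
#include <sstream>
#include <string>
#include <vector>

// One caption: start and end times in milliseconds (assumed non-negative) plus its text.
struct Cue {
    std::int64_t start_ms;
    std::int64_t end_ms;
    std::string text;
};

// Format milliseconds as a WebVTT timestamp, e.g. 83450 -> "00:01:23.450".
std::string vtt_timestamp(std::int64_t ms) {
    const long long hours = ms / 3600000;
    const long long minutes = (ms / 60000) % 60;
    const long long seconds = (ms / 1000) % 60;
    const long long millis = ms % 1000;
    char buf[32];
    std::snprintf(buf, sizeof buf, "%02lld:%02lld:%02lld.%03lld", hours, minutes, seconds, millis);
    return buf;
}

// Serialise cues as WebVTT: the "WEBVTT" header, a blank line, then one block per cue,
// each made of a "start --> end" timing line and the caption text.
std::string write_vtt(const std::vector<Cue>& cues) {
    std::ostringstream out;
    out << "WEBVTT\n\n";
    for (const Cue& cue : cues) {
        out << vtt_timestamp(cue.start_ms) << " --> " << vtt_timestamp(cue.end_ms) << '\n'
            << cue.text << "\n\n";
    }
    return out.str();
}
```

For example, `write_vtt({{0, 2500, "Hello"}})` gives the header, a blank line, the timing line `00:00:00.000 --> 00:00:02.500` and then `Hello`. WebVTT also allows optional cue identifiers and cue settings, which this sketch leaves out.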

The steps we are going to follow:

- Convert MP4 video into MP3 audio.

- Convert the MP3 audio into wav (whisper.cpp currently only supports wav input).

- Use whisper.cpp to generate a VTT file.

- Manually correct the generated VTT file.
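The hand-offs between these steps can be sketched in C++ as a single function. It derives each intermediate filename from the original video name and assembles the command lines.

- The MP3 extraction and whisper.cpp invocations mirror the ones used in this post.
- The WAV conversion flags (`-ar 16000 -ac 1 -c:a pcm_s16le`, i.e. 16 kHz, mono, 16-bit PCM) are an assumption taken from the whisper.cpp README.
- `caption_commands` is a hypothetical name.

The function only builds strings, so treat it as a planning aid rather than a runner.

```cpp
#include <string>
#include <vector>

// Build the command lines for the caption pipeline, starting from a video such as
// "video.mp4". Intermediate names follow this post: video-audio.mp3, video-audio.wav,
// and whisper.cpp then writes video-audio.wav.vtt next to its input.
// Assumes a plain filename with an extension (no dots in directory names, no spaces).
std::vector<std::string> caption_commands(const std::string& video, const std::string& model) {
    const auto dot = video.find_last_of('.');
    const std::string stem = (dot == std::string::npos) ? video : video.substr(0, dot);
    const std::string mp3 = stem + "-audio.mp3";
    const std::string wav = stem + "-audio.wav";

    return {
        // 1. Extract the audio track as MP3 (later used for manual corrections).
        "ffmpeg -i " + video + " -vn -codec:a libmp3lame -q 2 " + mp3,
        // 2. Resample to 16 kHz, mono, 16-bit PCM WAV (flags assumed from the whisper.cpp README).
        "ffmpeg -i " + mp3 + " -ar 16000 -ac 1 -c:a pcm_s16le " + wav,
        // 3. Transcribe to VTT, run from the build directory.
        "./bin/main -f " + wav + " -ovtt -m " + model,
    };
}
```

You could pass each string to `std::system` to run it, but quoting for filenames containing spaces is not handled here.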

-

This step is for convenience; you will run your manual corrections (in

the final step) against the MP3 generated here. We generate a wav file

in the next step though for whisper.cpp to use as its input.

video.mp4is the filename for our original video in MP4 format andvideo-audio.mp3is an audio track in MP3 format which FFmpeg generates for us. -

At the time of writing, whisper.cpp only accepts 16k bitrate, mono (as

opposed to stereo) audio input. This is a little more restrictive than

the Python Whisper model. However, conversion is straightforward from

the Terminal using FFmpeg.

video-audio.mp3is the input MP3 audio file andvideo-audio.wavis the output wav which FFmpeg generates for us. -

Generate captions with whisper.cpp.

./bin/main -f video-audio.wav -ovtt \-m ../models/ggml-medium.en.bin

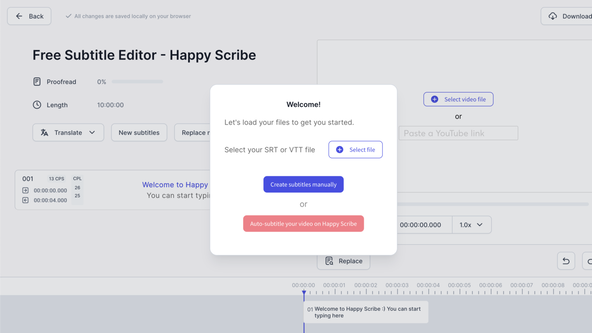

-ovtttells the whisper.cpp app we want VTT output, and the-mflag tells the app which model to use. Update the path here if you downloaded a different model earlier. whisper.cpp will output ajfk.wav.vttfile with the captions. You will need this in the next step. - The whisper.cpp output VTT will typically need some manual corrections, like correcting some name spellings, as an example. Happy Scribe is a free, online tool which syncs the VTT track to the playback, making manual corrections a breeze. Add your VTT file using the dialogue which appears when the page first loads. You will see it loaded in the bottom half of the screen with timestamps. This is the editor. Next, in the top half, click Select video file. This will accept MP3. The editor is fairly intuitive. If you click a caption, playback jumps automatically to that point in the track. When you are happy with edits, click the download icon in the top right corner, and you can save the corrected captions in SRT or VTT format.
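whisper.cpp is strict about its input format. A quick check of the WAV header can therefore catch a bad conversion before you start a long transcription.

The C++17 sketch below walks the RIFF chunks at the start of the file, reads the `fmt ` chunk, and checks for 16 kHz, mono, 16-bit PCM. Some caveats:

- The function names are hypothetical and not part of whisper.cpp.
- It assumes the `fmt ` chunk appears within the first few kilobytes, as it normally does.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <fstream>
#include <optional>
#include <string>
#include <vector>

// Fields from the WAV "fmt " chunk that matter for whisper.cpp input.
struct WavFormat {
    std::uint16_t audio_format;     // 1 = plain integer PCM
    std::uint16_t channels;         // 1 = mono
    std::uint32_t sample_rate;      // samples per second (Hz)
    std::uint16_t bits_per_sample;  // 16 for pcm_s16le
};

// WAV header fields are little-endian; callers check that the bytes exist.
std::uint16_t read_u16(const std::vector<std::uint8_t>& b, std::size_t i) {
    return static_cast<std::uint16_t>(b[i] | (b[i + 1] << 8));
}

std::uint32_t read_u32(const std::vector<std::uint8_t>& b, std::size_t i) {
    return static_cast<std::uint32_t>(b[i]) | (static_cast<std::uint32_t>(b[i + 1]) << 8) |
           (static_cast<std::uint32_t>(b[i + 2]) << 16) |
           (static_cast<std::uint32_t>(b[i + 3]) << 24);
}

// True if the four bytes at offset i spell the given chunk tag, e.g. "RIFF".
bool tag_at(const std::vector<std::uint8_t>& b, std::size_t i, const char* tag) {
    return i + 4 <= b.size() &&
           std::equal(tag, tag + 4, b.begin() + static_cast<std::ptrdiff_t>(i));
}

// Walk the RIFF chunks and return the "fmt " fields, or nullopt if the bytes do not
// look like a WAV file or the fmt chunk is missing or truncated.
std::optional<WavFormat> parse_wav_format(const std::vector<std::uint8_t>& b) {
    if (!tag_at(b, 0, "RIFF") || !tag_at(b, 8, "WAVE")) return std::nullopt;
    std::size_t pos = 12;  // first chunk follows "RIFF", the size field and "WAVE"
    while (pos + 8 <= b.size()) {
        const std::uint32_t size = read_u32(b, pos + 4);
        if (tag_at(b, pos, "fmt ")) {
            const std::size_t f = pos + 8;
            if (size < 16 || f + 16 > b.size()) return std::nullopt;
            return WavFormat{read_u16(b, f), read_u16(b, f + 2), read_u32(b, f + 4),
                             read_u16(b, f + 14)};
        }
        pos += 8 + static_cast<std::size_t>(size) + (size & 1u);  // chunks pad to even sizes
    }
    return std::nullopt;
}

// Matches what whisper.cpp expected at the time of writing: 16 kHz, mono, 16-bit PCM.
bool is_whisper_ready(const WavFormat& f) {
    return f.audio_format == 1 && f.channels == 1 && f.sample_rate == 16000 &&
           f.bits_per_sample == 16;
}

// Read only the start of the file; an unreadable file gives an empty buffer.
std::vector<std::uint8_t> read_header(const std::string& path, std::size_t max_bytes = 4096) {
    std::vector<std::uint8_t> buf(max_bytes);
    std::ifstream in(path, std::ios::binary);
    in.read(reinterpret_cast<char*>(buf.data()), static_cast<std::streamsize>(buf.size()));
    buf.resize(static_cast<std::size_t>(in.gcount()));
    return buf;
}
```

To use it:

1. Load the first bytes with `read_header("video-audio.wav")`.
2. Pass them to `parse_wav_format`.
3. Only go ahead with whisper.cpp if the result has a value and `is_whisper_ready` returns true.

Files using the extensible WAV header (format tag 0xFFFE) are rejected by this sketch, even though they can hold the same audio.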

To recap, we saw:

- that whisper.cpp is an alternative to Whisper Python and can run without a GPU

- how to compile and build whisper.cpp

- how you can use Happy Scribe to manually correct AI-generated captions

Do you have to run Whisper AI transcription on a GPU? #

- GPUs are often used for machine learning tasks, besides their original purpose of rendering realistic graphics on games consoles and computers. GPUs are expensive, and instead of buying one outright, you can buy GPU compute time from a cloud service provider. For running Whisper transcriptions, there is another option. The whisper.cpp project offers an optimized C++ version of Whisper which can run on a regular CPU (the main processor in your computer). We have seen that, if you are already familiar with using the Terminal, it is not too difficult to run the transcription on your local machine, without having to buy a GPU or pay for GPU compute time from a cloud provider.

What tools are there for editing or correcting video captions? #

- Happy Scribe offers a free service for editing video captions. You can run it in your browser and the controls are intuitive. You can edit captions while watching synced video playback in the same view. Also, when you click or edit a caption in the editor, playback jumps to the corresponding point in your video. Finally, Happy Scribe offers tips on keeping captions readable, warning you about very long lines or captions with too many characters to read in the time they are displayed.
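The readability tips mentioned above come down to simple arithmetic:

- characters per second (CPS) over the time a caption is on screen
- characters per line

The C++ sketch below flags cues that go over both limits. A few things to note:

- The default limits (17 characters per second, 42 characters per line) are illustrative assumptions, not Happy Scribe's actual thresholds. Adjust them to whichever style guide you follow.
- `visible_chars` and `lint_cue` are hypothetical helper names.
- Characters are counted as UTF-8 code points, so an accented letter counts once.

```cpp
#include <cstddef>
#include <cstdint>
#include <string>

// Count UTF-8 code points (not bytes), skipping line breaks.
// Assumes the text is valid UTF-8.
std::size_t visible_chars(const std::string& s) {
    std::size_t n = 0;
    for (unsigned char c : s) {
        if (c != '\n' && (c & 0xC0) != 0x80) ++n;  // 0b10xxxxxx marks a continuation byte
    }
    return n;
}

struct CueWarnings {
    bool too_fast;       // more characters per second than the reading-speed limit
    bool line_too_long;  // at least one line exceeds the per-line limit
};

// Check one caption against illustrative (assumed) limits.
CueWarnings lint_cue(const std::string& text, std::int64_t start_ms, std::int64_t end_ms,
                     double max_cps = 17.0, std::size_t max_line_chars = 42) {
    CueWarnings w{false, false};
    const double seconds = static_cast<double>(end_ms - start_ms) / 1000.0;
    // A cue with no display time can never be read, so treat it as too fast.
    w.too_fast = seconds <= 0.0 || static_cast<double>(visible_chars(text)) / seconds > max_cps;

    // Check each line separately; captions often span two lines.
    std::size_t line_start = 0;
    while (line_start <= text.size()) {
        std::size_t line_end = text.find('\n', line_start);
        if (line_end == std::string::npos) line_end = text.size();
        if (visible_chars(text.substr(line_start, line_end - line_start)) > max_line_chars) {
            w.line_too_long = true;
        }
        line_start = line_end + 1;
    }
    return w;
}
```

For example, the 20-character caption "Hello there, friends" shown for two seconds works out at 10 characters per second, so it passes. Shown for one second, it would be flagged as too fast.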

Can you use FFmpeg to convert MP4 video to MP3 audio only? #

- We saw you can install FFmpeg on macOS via Homebrew. Once installed, running this kind of conversion is straightforward from the command line. `ffmpeg -i video.mp4 -vn -codec:a libmp3lame -q 2 video-audio.mp3` will do the job. Replace `video.mp4` with the path to your input video. FFmpeg saves the generated MP3 to `video-audio.mp3`.

⚙️ Compiling whisper.cpp #

Before you can use whisper.cpp, you need to clone the repo and compile the C++ code into a binary. We use CMake to help build the binary. CMake is cross-platform tooling useful when working with C++. It generates a Makefile, setting compiler paths for any third-party libraries. On macOS, you can install CMake with Homebrew. We will also need to have FFmpeg installed locally, so let’s feed two birds with one scone!

brew install cmake ffmpeg

Then we can clone the whisper.cpp repo and build and compile the app from the Terminal:

git clone https://github.com/ggerganov/whisper.cpp.git
cd whisper.cpp
mkdir build
cd build
cmake ..
make

If all went well, the executable app file will be at build/bin/main. You can keep it there, while you are still testing and playing around.

Model Download #

Whisper itself has various models, some of which are ported to whisper.cpp. These models differ in their sophistication and the languages they specialize in. For English, I found the medium model (medium.en) is very accurate and also runs in reasonable time. If you need something quicker but potentially less accurate, try the small or tiny models. We need to download the model in advance. To download medium.en, run this from the build directory:

sh ../models/download-ggml-model.sh medium.en

Open up the models directory of the repo to see a full list of available models. That’s all the setup out of the way, so next we can see how to create the captions.
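If you switch between models, a small helper can build the model path and confirm the file is there before you start a long run. This C++17 sketch makes three assumptions:

- Downloaded files follow the `ggml-<model>.bin` naming seen in the command used in this post (for example `ggml-medium.en.bin`).
- You pass in the models directory path, relative to wherever you run the binary.
- `model_path` and `model_downloaded` are hypothetical helper names.

```cpp
#include <filesystem>
#include <string>
#include <system_error>

namespace fs = std::filesystem;

// Build the model file path, assuming the ggml-<model>.bin naming,
// e.g. ("../models", "medium.en") -> "../models/ggml-medium.en.bin".
fs::path model_path(const fs::path& models_dir, const std::string& model) {
    return models_dir / ("ggml-" + model + ".bin");
}

// True if the model file exists as a regular file. Uses the non-throwing
// std::filesystem overload, so a missing directory just returns false.
bool model_downloaded(const fs::path& file) {
    std::error_code ec;
    return fs::is_regular_file(file, ec);
}
```

Checking up front means a typo in the model name fails immediately, rather than after you have waited for whisper.cpp to start.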

☑️ Creating and Correcting the Whisper Captions #

Creating Whisper Video Captions #


🙌🏽 Creating Whisper Video Captions: Wrapping Up #

In this post, we had a brief look at how to get going on creating Whisper video captions.

We skimmed over some detail in the post, so please let me know if any aspect could do with more clarification.

🏁 Creating Whisper Video Captions: Summary #

🙏🏽 Creating Whisper Video Captions: Feedback #

Have you found the post useful? Would you prefer to see posts on another topic instead? Get in touch with ideas for new posts. Also, if you like my writing style, get in touch if I can write some posts for your company site on a consultancy basis. Read on to find ways to get in touch, further below. If you want to support posts similar to this one and can spare a few dollars, euros or pounds, please consider supporting me through Buy me a Coffee.

😲 Just amazed by the quality and speed of Whisper transcriptions.

— Rodney (@askRodney) February 15, 2023

Dropped a guide on how you can create and correct video closed caption VTT files locally with Whisper and without a GPU.

Hope you find it useful!

#a11y #openai #askRodney https://t.co/VZW3izNDgN

Finally, feel free to share the post on your social media accounts for all your followers who will find it useful. As well as leaving a comment below, you can get in touch via @askRodney on Twitter, @rodney@toot.community on Mastodon and also the #rodney Element Matrix room. Also, see further ways to get in touch with Rodney Lab. I post regularly on Astro as well as C++. Also, subscribe to the newsletter to keep up-to-date with our latest projects.